Improving My Website's Performance, A Penny Drops!

Posted on May 27, 2014 by Mike Nuttall

Today I take a break from systematically reviewing my website against Google's Webmaster Guidelines to talk about a realisation about what has been happening to my site during the 100 blog posts in 100 days challenge.

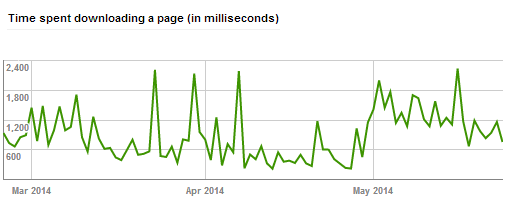

After looking at the information from Webmaster Tools about my site in this post, the news was mostly positive. I did discover something that needed changing concerning Onsitenow sub-domains, which I acted on. But I also noticed that the time spent downloading pages from my website by Google's web crawlers seemed to have increased steeply in May, and I wasn't sure why. The time spent downloading had increased but the number of kilobytes downloaded hadn't changed, so something wasn't quite right there.

The following day I took a closer look at the speed of my site to see what was going on, and I discovered that I had made a mistake in my code that meant the page took a lot longer to respond than it should have. I was retrieving data uncached. When you do that, the server has to do work to get the information out of the database on every request. Whenever you can, you should cache any data that doesn't change on a regular basis so that the server can serve it up quickly.
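To make the idea concrete, here is a minimal Python sketch of caching a slow lookup. It is not the code from the site (which runs on MODX and PHP); `TimedCache`, `fetch_from_database` and the 300-second lifetime are all made up for illustration.

```python
import time


class TimedCache:
    """Keep computed values for `ttl` seconds so repeat requests skip the slow work."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get(self, key, compute):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]           # cache hit: no database work needed
        value = compute()             # cache miss: do the slow work once
        self._store[key] = (now + self.ttl, value)
        return value


db_calls = 0


def fetch_from_database():
    """Hypothetical stand-in for a slow database query."""
    global db_calls
    db_calls += 1
    return ["post-1", "post-2"]


cache = TimedCache(ttl=300)
for _ in range(3):                    # three page requests in a row
    posts = cache.get("latest_posts", fetch_from_database)

print(db_calls)                       # the query ran once for three requests
```

Only the first request pays for the database query; the next two are answered from memory until the entry expires.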

So I remedied that problem and made everything get cached. I thought that must have been the cause of the increase in time spent downloading, but it made me think a bit more about page speed and caching. While looking into the issue I used a couple of Linux tools to measure page speed, and it struck me that it would be good to have a tool that could quickly check the page speed of all your web pages. So I wrote a script that does exactly that.
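A tool along those lines could look something like the following Python sketch. It is not the script described above; the names `fetch_page` and `time_pages` are invented for illustration, and it assumes you already have a list of page URLs to check.

```python
import time
import urllib.request


def fetch_page(url):
    """Download a page and return its body as bytes."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read()


def time_pages(urls, fetcher=fetch_page, clock=time.perf_counter):
    """Return a list of (url, seconds, size_in_bytes), slowest page first."""
    results = []
    for url in urls:
        start = clock()
        body = fetcher(url)
        results.append((url, clock() - start, len(body)))
    return sorted(results, key=lambda row: row[1], reverse=True)


def report(results):
    """Format the timing rows as printable lines."""
    return [f"{seconds:7.3f}s {size:8d}B  {url}" for url, seconds, size in results]
```

Calling `report(time_pages(["https://example.com/", "https://example.com/blog/"]))` with your own URLs in place of the placeholders gives one line per page. The `fetcher` and `clock` parameters exist only so the timing logic can be tried out without touching the network.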

With this script I could easily see the speed of all the pages on my website at once. That is how I noticed that all the pages were a lot slower after I made any change in the content management system, MODX. The way MODX works is that it clears the cache for the whole site when any resource is changed and saved in the manager. This is because that resource could appear on any page, so the cache needs to be regenerated with the new content.

Except that the new cache for a page is only generated the next time someone requests that page; only then is it built and stored. So if you are making changes or writing a blog post, every time you hit the save button you slow down the whole site for the next visitor. If you spend a lot of time on that post and hit the save button a lot (which is a very sensible habit to get into if you don't like losing your work), that's a lot of slowed-down pages over an extended period of time. And if a visitor to your site during that period happens to be Googlebot, that's not going to be too impressive.
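The effect is easy to see in a toy model. The Python sketch below is a deliberate simplification of the behaviour described above, not MODX's actual code; the `SiteCache` class and the page names are made up for the example.

```python
class SiteCache:
    """Toy model of a site-wide page cache that is rebuilt lazily."""

    def __init__(self, render):
        self.render = render       # slow function: page name -> HTML
        self.pages = {}            # page name -> cached HTML
        self.slow_requests = 0     # requests that had to rebuild a page

    def save_resource(self):
        """Saving any resource throws away the cache for every page."""
        self.pages.clear()

    def request(self, page):
        """Serve from the cache if possible, otherwise rebuild and store."""
        if page not in self.pages:
            self.slow_requests += 1
            self.pages[page] = self.render(page)
        return self.pages[page]


site = SiteCache(render=lambda page: f"<html>{page}</html>")
pages = ["home", "blog", "contact"]

for page in pages:           # first visits build the cache
    site.request(page)

for _ in range(5):           # five saves while writing a post...
    site.save_resource()
    for page in pages:       # ...each followed by a visit to every page
        site.request(page)

print(site.slow_requests)    # 18: 3 initial builds + 5 saves x 3 pages
```

Without the saves, only the first three requests would have been slow; each save makes every page slow again for whoever arrives next.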

So for the last 25 days I have been spending much longer writing blog posts, making changes, hitting that save button and really slowing down all the pages for extended periods. That must have had something to do with the graph, and with my drop in the search rankings.

So what can I do about it?

An immediate change I made was to stop writing my blog posts in MODX. I am now writing this in Dreamweaver, which, coincidentally, is much less frustrating to use than the text editor I had installed in MODX, so I can hit the save button as many times as I want without fear of slowing my website down. When I have finished writing and proofreading it, I'll copy and paste it into the blog and hit the save button just the once.

Then I will run my script that measures the speed of all the pages, because a side effect of running it is that it requests every page and therefore regenerates the cache for each one. If I run it again immediately afterwards I can see that they all load much faster.
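That warm-then-check routine could be sketched in Python roughly as follows. This is an illustration under assumptions rather than the script itself: `warm_and_compare` is an invented name, and `fetcher` stands for whatever function you use to request a page (for example one built on `urllib.request.urlopen`).

```python
import time


def warm_and_compare(urls, fetcher, clock=time.perf_counter):
    """Request every URL twice; return (url, first_seconds, second_seconds)."""

    def timed(url):
        start = clock()
        fetcher(url)
        return clock() - start

    first = {url: timed(url) for url in urls}    # this pass rebuilds each page's cache
    second = {url: timed(url) for url in urls}   # this pass should be served from cache
    return [(url, first[url], second[url]) for url in urls]
```

If the second time for a page is not clearly lower than the first, that page is probably not being cached at all and is worth a closer look.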

Also, while researching MODX's cache I read an excellent article by JP Deveries about the different cache-related plugins for MODX. One of them, Regencache, sounded ideal, but unfortunately I couldn't get it to work. I need to look into that further.

Another plugin that got mentioned is xFPC. It doesn't solve the problem of regenerating the cache, but it does a better job of caching the MODX pages in the first place, and it speeded up my pages considerably. (It also does some other clever stuff with Ajax calls that I need to look into.)

Statcache also looks well worth exploring for super-fast sites, as it makes static files of all the web pages and uses server rewrite rules, thus bypassing MODX and PHP altogether.

Web development, what did I do today?

My timetable:

| Time | Activity |
| --- | --- |
| 5 hours 43 minutes | Websites: writing tool to measure speeds of every page on a website, blog writing, looking at W3C results, checking search rankings, checking broken links |
| 3 hours 15 minutes | Admin: sorting out my accounts |
| 37 minutes | Admin: sorting out some Windows 7 issues, email, changing passwords |
| 12 minutes | App development: looking at some app features |
| 20 minutes | Networking: organizing a meeting, thinking about a talk |

Total: 10 hours 9 minutes

Got some boring chores (doing my books and records) out of the way.

Exercise: Half an hour gardening warm up followed by a 10k run.

Tomorrow: More blogging, client work and networking.